Are the iPhone 16 Pro's Spatial Videos Any Better Than The iPhone 15 Pro's?

Announced last year, in September 2023, the iPhone 15 Pro and Pro Max were the first iPhones with the ability – via a promised future software update – to record Spatial videos for viewing on the Vision Pro. These 3D videos were to be recorded using the iPhones' Main and Ultra Wide cameras.

Where We're Coming From: Last Year's iPhones

Greg Joswiak, Apple's SVP of Worldwide Marketing, said during the iPhone 15 Pro's announcement:

"And there's one more unique feature we've created just for the world of spatial computing. With iPhone 15 Pro, you can now capture spatial videos. We use the ultra wide and main cameras together to create a three dimensional video. You can then relive these memories in a magical way on Apple Vision Pro (...) Spatial video will be available later this year."

[video timestamp: 1h 17m 26s]

The ability to record Spatial videos on the iPhone 15 Pro and Pro Max arrived in November 2023, with public beta 2 of iOS 17.2.

But it wasn't without its technical challenges and limitations. The iPhone 15 Pro's Main and Ultra Wide cameras were significantly mismatched: the Main camera had a resolution of 48 Megapixels with an aperture of ƒ/1.78 (good!), while the Ultra Wide had a 12 Megapixel sensor with an aperture of ƒ/2.2 (not as good!). That's a quarter of the resolution and more than half a stop worse in aperture terms, so the Ultra Wide couldn't let in as much light, or capture as much detail, as the Main camera.
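For anyone who wants to check the "more than half a stop" figure, here's a minimal sketch of the arithmetic. It assumes only the standard photographic relationship that the light admitted scales with 1/N² for an f-number N; the function names are mine, purely for illustration.

```python
import math


def stops_between(f_fast: float, f_slow: float) -> float:
    """Exposure difference, in stops, between a faster and a slower f-number."""
    # One stop is a factor of 2 in light; light scales with 1 / N^2.
    return 2 * math.log2(f_slow / f_fast)


def light_ratio(f_fast: float, f_slow: float) -> float:
    """Fraction of light the slower lens admits relative to the faster one."""
    return (f_fast / f_slow) ** 2


main_f = 1.78        # Main camera aperture quoted above
ultra_wide_f = 2.2   # Ultra Wide aperture quoted above

print(f"{stops_between(main_f, ultra_wide_f):.2f} stops")  # 0.61 stops
print(f"{light_ratio(main_f, ultra_wide_f):.0%}")          # 65%
```

So, on aperture alone, the Ultra Wide admits roughly two thirds of the light that the Main camera does.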

Enter the iPhones 16

On the non-Pro iPhones 16 this year, there's a 48 Megapixel Main camera...

...and a 12MP Ultra Wide...

Speaking about the iPhone 16 (regular and Plus), Piyush Pratik, iPhone Product Manager, said at this September's event:

iPhone 16 also has a phenomenal 48 megapixel main camera that offers incredible flexibility.

[video timestamp 59m 44s]

and we are pushing things further with spatial capture on iPhone 16. The new camera design enables us to get stereoscopic information from the ultra wide and fusion [sic][1] cameras for capturing spatial videos. And for the very first time on iPhone, we can also capture spatial photos. It's so powerful to preserve your precious moments with spatial photos and videos, like a kid's footsteps or a family vacation, and relive them for years to come on Apple Vision Pro. iPhone 16 also makes audio quality better...

[video timestamp 1h 02m 17s]

[1]Yes, they referred to the "Main" camera of the non-Pro iPhone 16 as the "Fusion" camera here.

Notice, though, that Apple is emphasising the emotional aspect of being able to "preserve your precious moments" and "relive them for years to come on Apple Vision Pro" – they aren't highlighting a technical aspect like the iPhone 16's ability to capture "incredibly detailed" Spatial videos and photos, as they do when talking about non-Spatial photos, for example. Keep this in mind.

Meanwhile, the upgrades to camera hardware continue apace with the iPhone 16 Pro lineup, on which they've given us, in their words, "three new cameras."

Let's focus on the Main (now Fusion, in the Pro phones) and Ultra Wide cameras, the latter of which was the most in need of an upgrade when it comes to capturing Spatial videos.

Apple's Della Huff, Manager of Camera and Photos Product Marketing, talking about the Pro phones, says:

We know that high resolution matters in a variety of scenes, so we are introducing a new 48 megapixel ultra wide camera. It has a new quad pixel sensor for high resolution shots with autofocus. You can capture so much more detail in wider angle shots, or when you want to get up close for a gorgeous macro shot.

[video timestamp: 1h 21m 09s]

Again, note "shots" – so Apple is emphasising the new Ultra Wide sensor's advantages for photos, not videos.

Here are the written specs of the iPhone 16 Pro and Pro Max's Fusion and Ultra Wide cameras, from Apple's website:

48MP Fusion: 24mm, ƒ/1.78 aperture, second‑generation sensor‑shift optical image stabilisation, 100% Focus Pixels, support for super‑high‑resolution photos (24MP and 48MP)

48MP Ultra Wide: 13mm, ƒ/2.2 aperture and 120° field of view, Hybrid Focus Pixels, super-high-resolution photos (48MP)

[emphasis added]

OK, the iPhone 16 Pro's cameras used for Spatial video now both have 48 Megapixel sensors. So does this give us similar video quality for both eyes in Spatial videos?

Many people hoped this would be the case: The new 48MP sensor in the Ultra Wide would "match" that of the Fusion camera, so would give us higher quality Spatial video for the left eye (when the iPhone records Spatial video in landscape with the lenses along the top edge).

But the number of pixels on the sensor is not the only factor at play here. There's also:

- the aperture of the Ultra Wide lens (unchanged from last year, still "only" ƒ/2.2 vs the Fusion camera's ƒ/1.78);

- the physical size of the sensor's pixels which are much smaller (1.4 μm on the Ultra Wide vs. the Fusion camera's 2.44 μm); and

- the focal length which is of course very different (13mm vs the Fusion camera's 24mm).

Given the same exposure time for both sensors when recording Spatial video...

- The smaller aperture means less light reaches the Ultra Wide sensor.

- The smaller pixels mean even less light falls on each pixel.

- The shorter (wider) focal length means each pixel "captures" light from a larger area, resulting in less detail.

Overall, the Ultra Wide sensor is still going to struggle to match the quality of each frame captured by the wider-aperture, bigger-pixel, narrower-field-of-view Fusion camera when they're working simultaneously to capture Spatial videos.
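To put a rough number on the first two factors, here's a back-of-the-envelope sketch. It assumes the light reaching each pixel scales with pixel area divided by the square of the f-number, and it uses the pixel sizes and apertures quoted above. It deliberately ignores lens transmission, sensor efficiency, the crop needed to match fields of view, and whatever processing Apple applies, so treat it as an illustration rather than a measurement.

```python
import math


def relative_light_per_pixel(f_number: float, pixel_pitch_um: float) -> float:
    """Relative light gathered per pixel: pixel area over f-number squared."""
    return pixel_pitch_um ** 2 / f_number ** 2


fusion = relative_light_per_pixel(f_number=1.78, pixel_pitch_um=2.44)
ultra_wide = relative_light_per_pixel(f_number=2.2, pixel_pitch_um=1.4)

ratio = ultra_wide / fusion
deficit_stops = math.log2(1 / ratio)

print(f"Ultra Wide pixel receives {ratio:.0%} of the Fusion pixel's light")
print(f"That is a deficit of about {deficit_stops:.1f} stops")
```

Under these assumptions, each Ultra Wide pixel gets only about a fifth of the light that a Fusion pixel does, a little over two stops less.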

Enough numbers and tech specs! How about a real-world test?

There's still hope that there will be an upgrade to Spatial video quality with the new phones though, right?

To find out, I put the new hardware through a couple of tests.

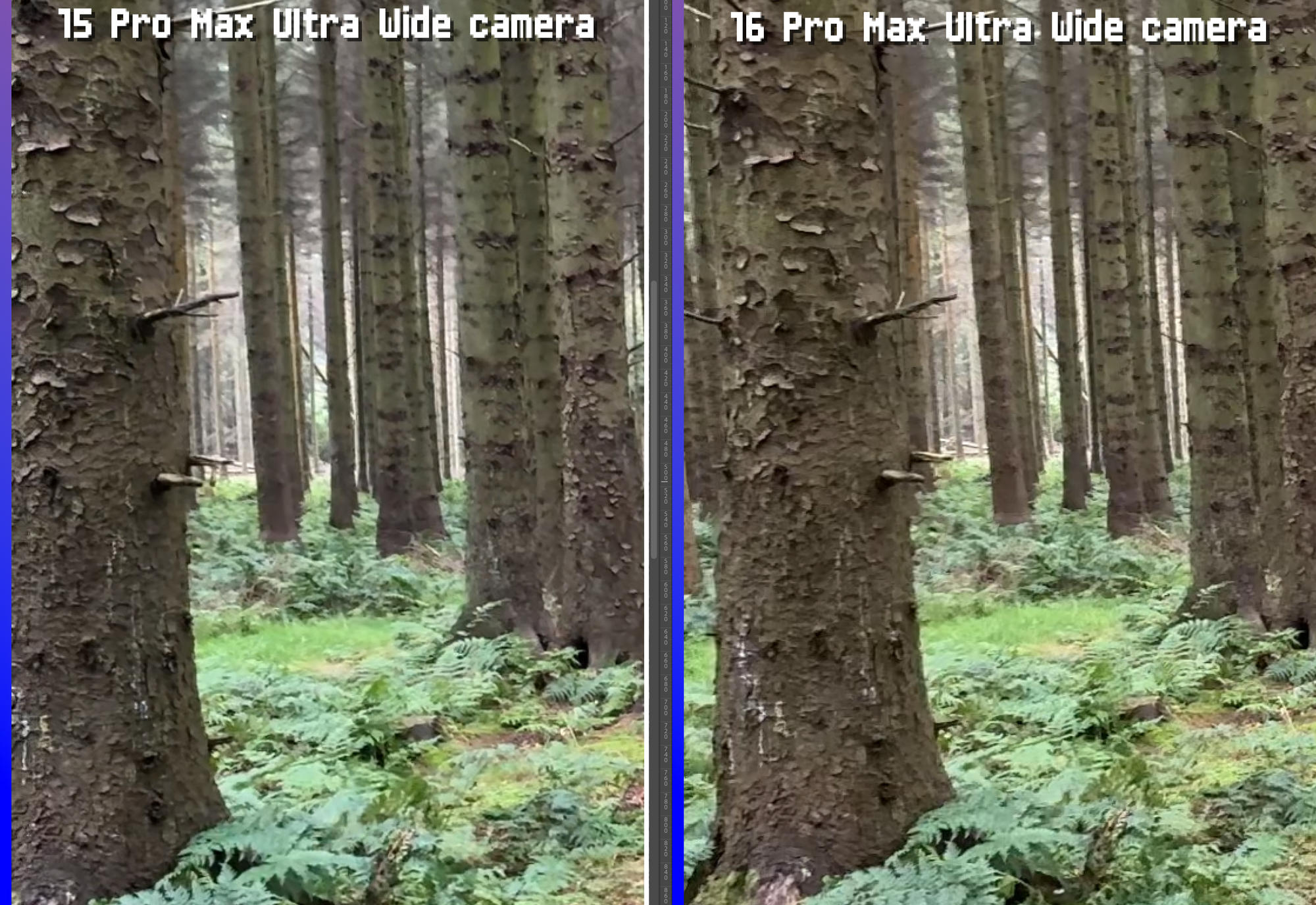

Test One: Outdoors

At the weekend, I ventured into a forest on an overcast day here in Scotland, with both the 16 Pro Max and 15 Pro Max.

It wasn't California-bright in this sheltered location, but the Camera app never showed its "more light is required" warning while I was shooting Spatial video clips, so I can assume both iPhones were happy with the amount of Scottish daylight available.

I used a gimbal to keep the shots as smooth as possible, and took the same shot on both the iPhone 16 Pro Max and 15 Pro Max, using the same setup, just a minute or so apart.

I also took the frame grabs you'll see below from a still section of each video for the best chance of maximum sharpness – basically ensuring both iPhones had every chance to capture crisp, detailed frames for me to inspect.

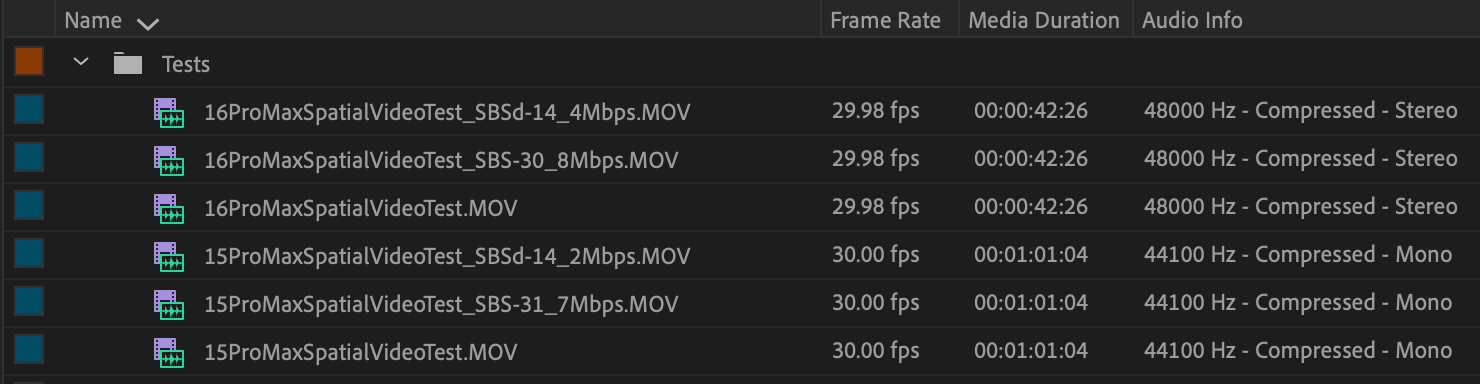

When I got home, I transferred the Spatial video files onto my MacBook Pro via the Photos app and re-encoded them from their MV-HEVC format – which Apple uses for Spatial videos – into Full Side-by-Side (SBS) format using Mike Swanson's excellent spatial command-line utility.

[For anyone interested in some more technical information, the videos were re-encoded to the SBS format with spatial's quality setting manually set to 0.6 which output SBS files with a bitrate of ~31Mbps, vs. spatial's default quality of 0.5, or ~14Mbps.]

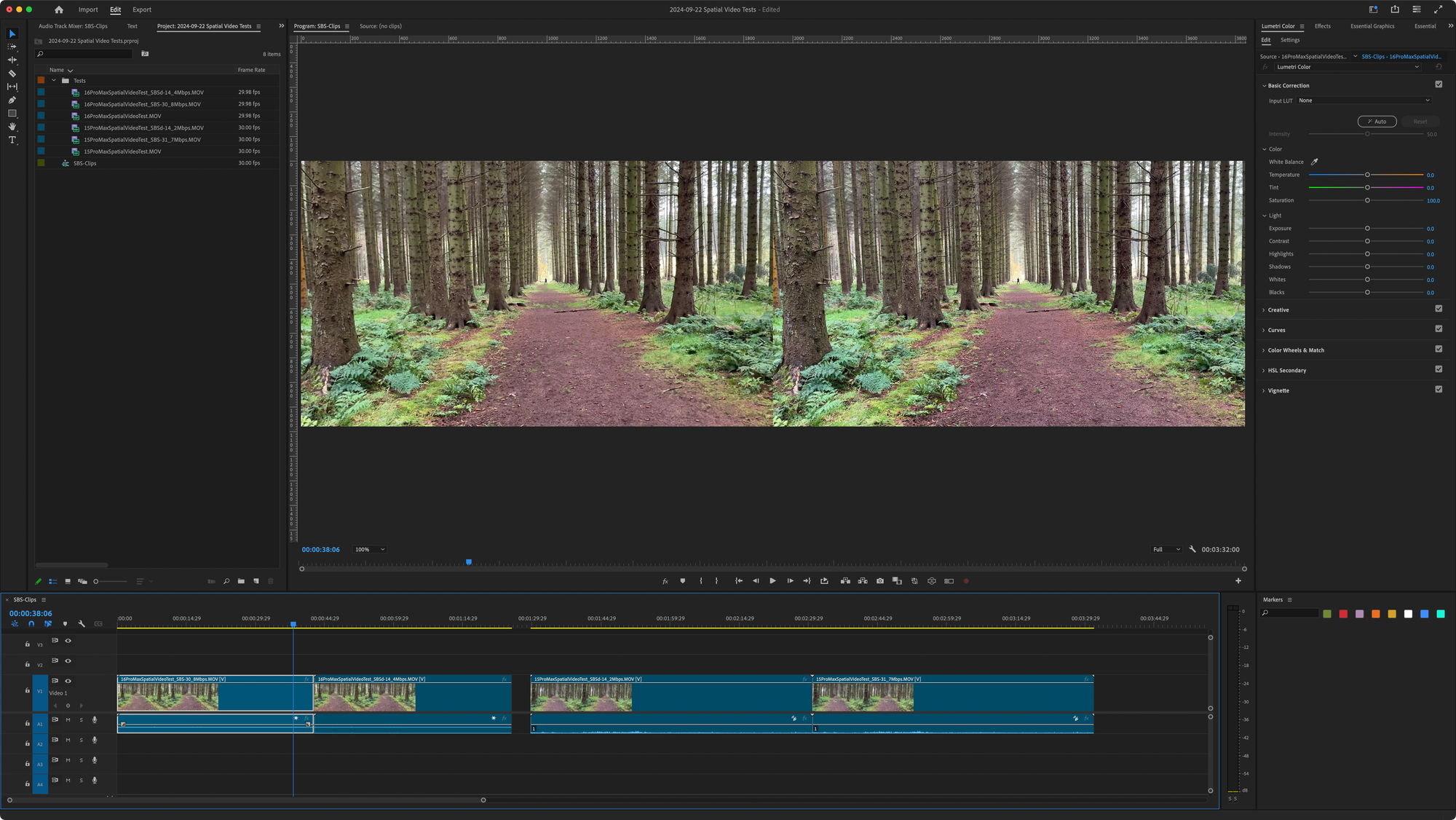

I then brought the SBS videos into Premiere Pro.

Aside: It's maybe worth noting at this point that the iPhone 16 Pro's Spatial videos are recorded at a frame rate of 29.98 fps and with 48,000Hz audio, versus the iPhone 15 Pro's 30.00 fps with 44,100Hz audio.

In Premiere Pro, I was able to export frame grabs (in uncompressed PNG format) which I could then bring into Photoshop for closer inspection.

iPhone 15 Pro Max Spatial Video

Let's start with the Spatial video taken using last year's iPhone 15 Pro Max.

Looking at both left and right eyes at 100% magnification, cropped to the left-most parts of each eye's view, there's a clear difference (click image to zoom in):

The left eye/Ultra Wide camera's frame is quite soft compared with the right eye/Main camera's view. Details, particularly in the background, are smudged or lost altogether.

But the cameras on the iPhones 15 Pro are mismatched: The Ultra Wide is only 12 Megapixels! We knew this going in! Let's move on to this year's upgraded Ultra Wide camera!

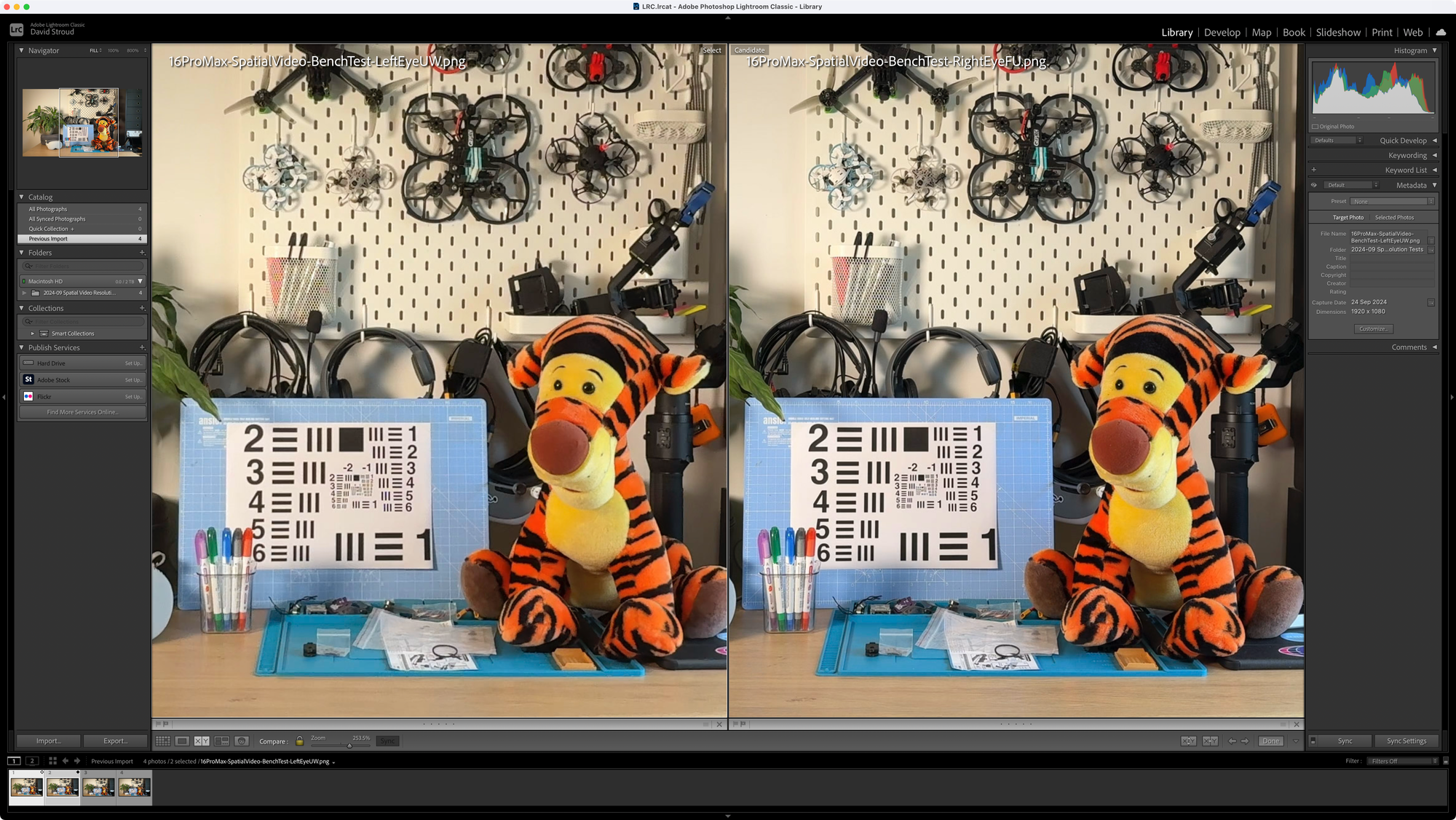

iPhone 16 Pro Max Spatial Video

OK. Ready? Here's the same shot, taken with the iPhone 16 Pro Max and its new 48 Megapixel Ultra Wide sensor for the left eye...

"Wait a minute," you might be thinking (as I did at first)... "you're clearly sleep-deprived. You've exported the same frame grabs again." Well, I might be... 🥱 ...but I haven't.

To my eye, and after pixel-peeping at 5x magnification, there's practically zero improvement in quality (again, feel free to click/zoom and inspect yourself).

If I'm being very generous, perhaps the background detail is a little better preserved with the 16 Pro – the trees in the background are a touch less "oil painty" – but this is really clutching at straws (5x magnified straws!)

Test Two: Indoors

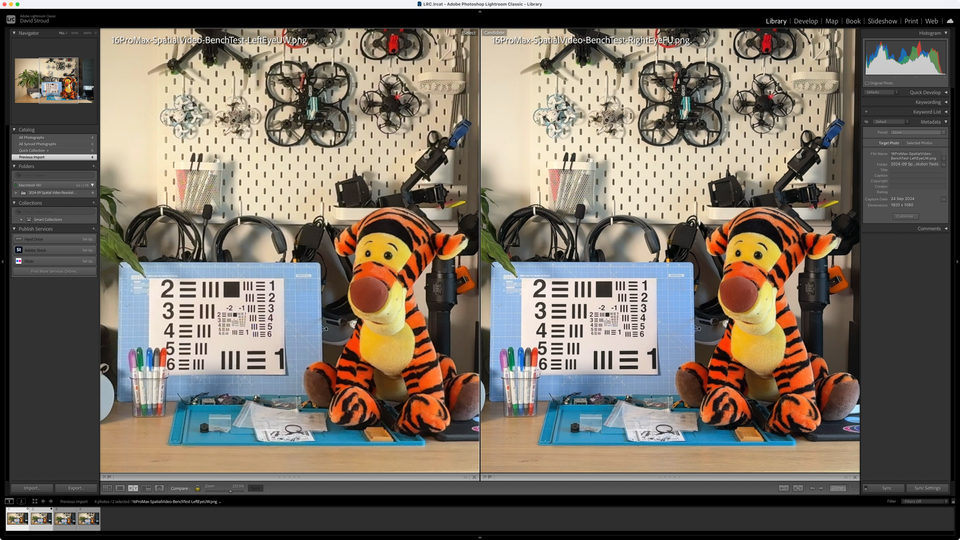

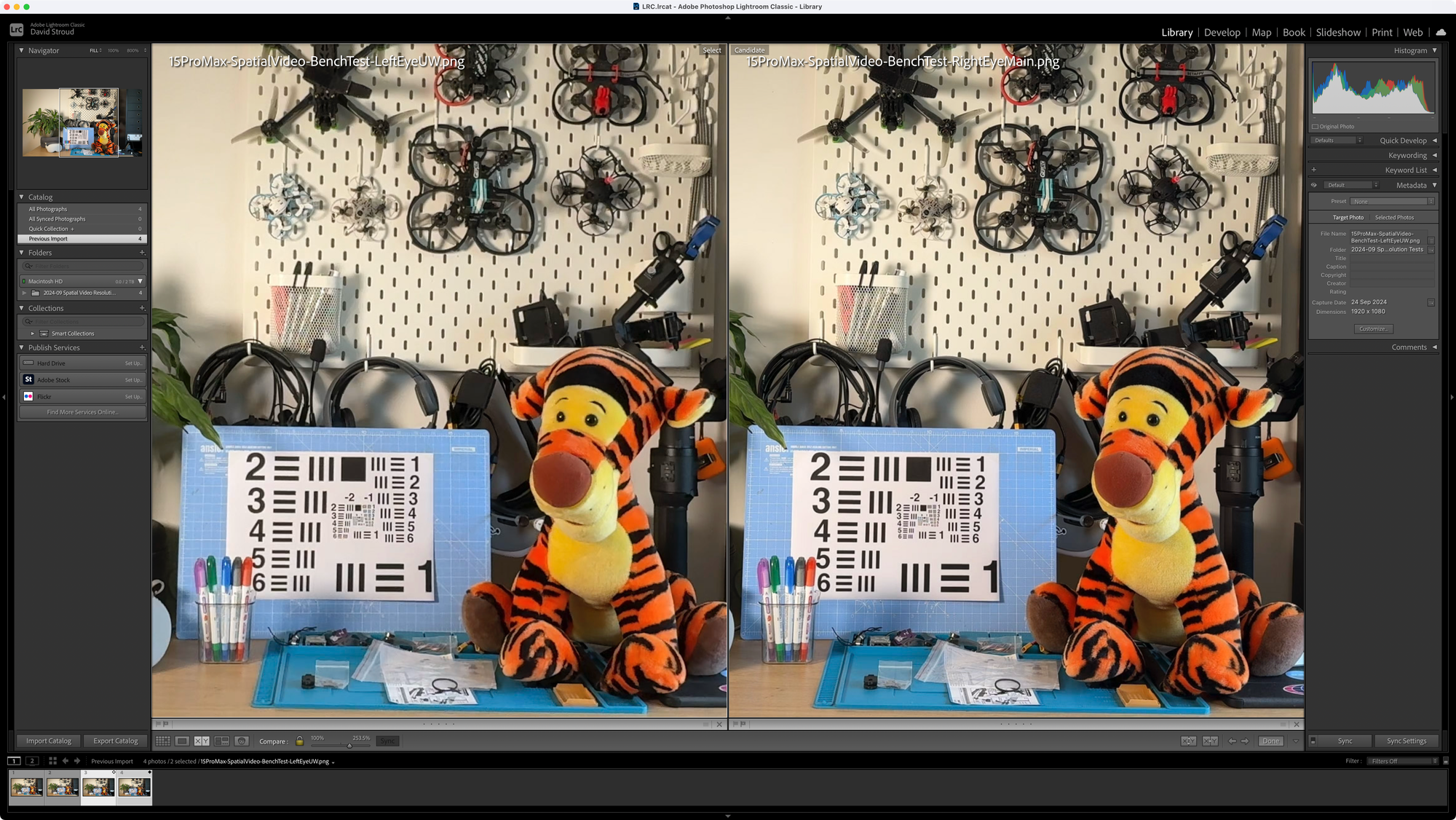

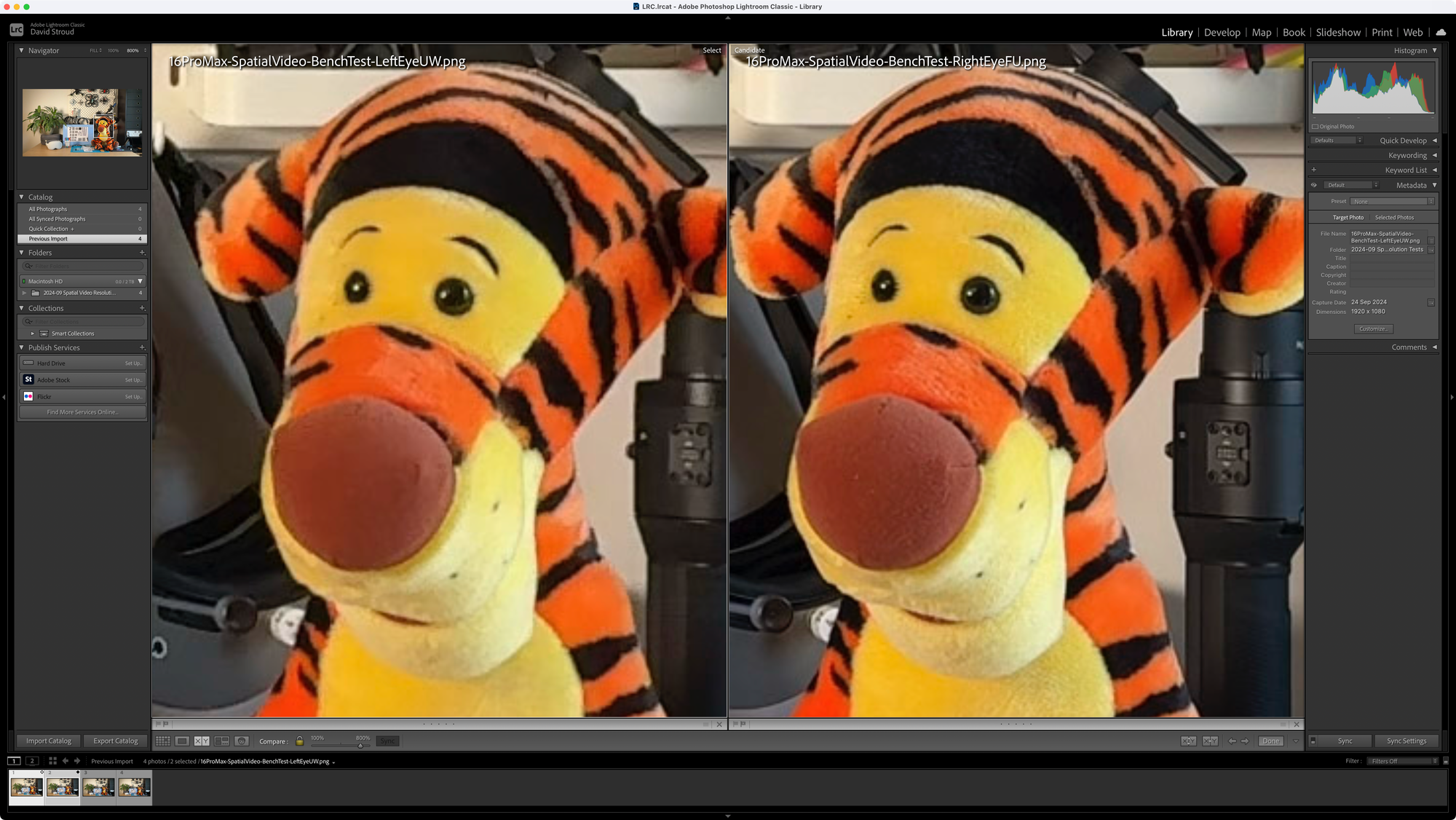

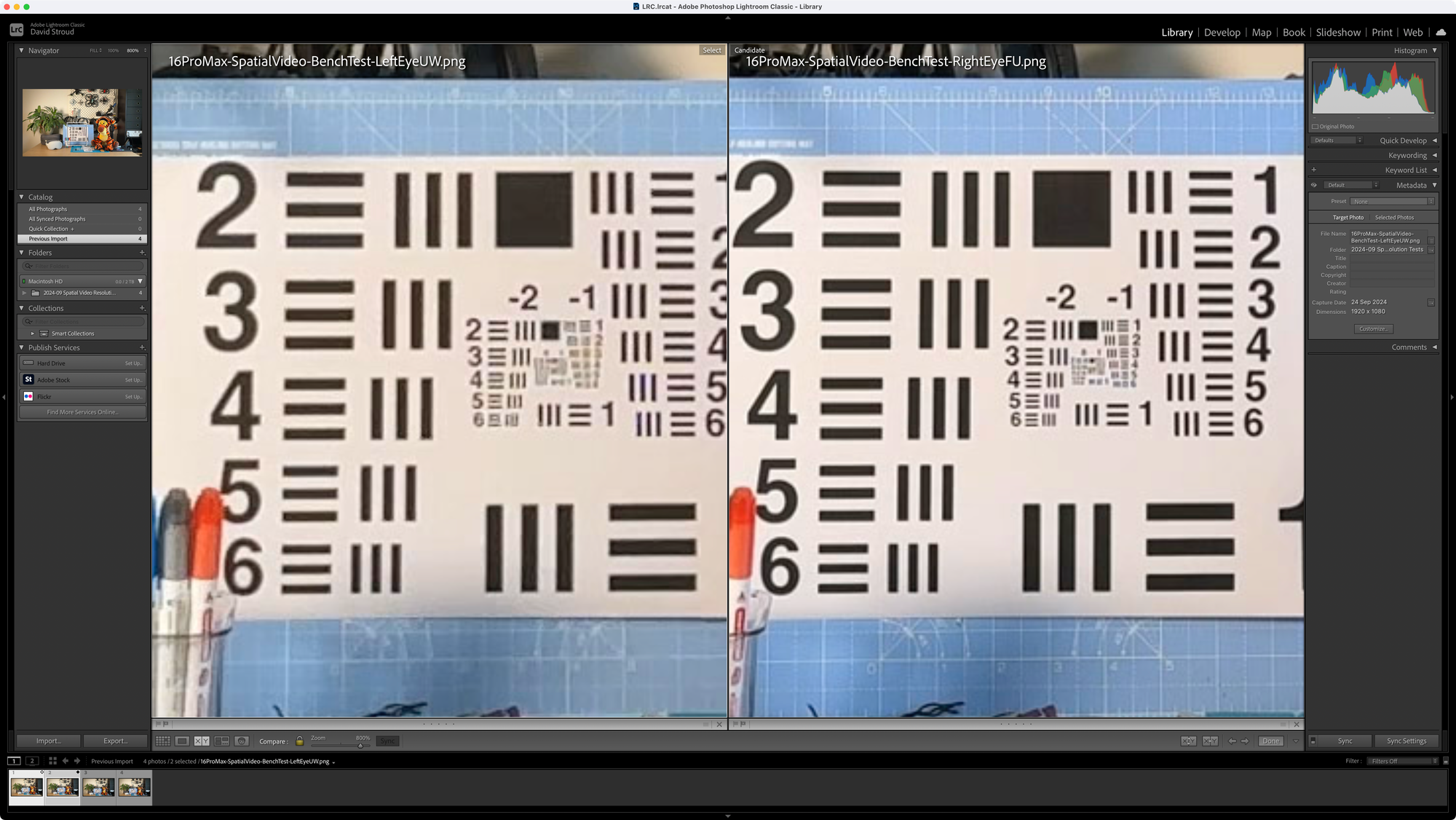

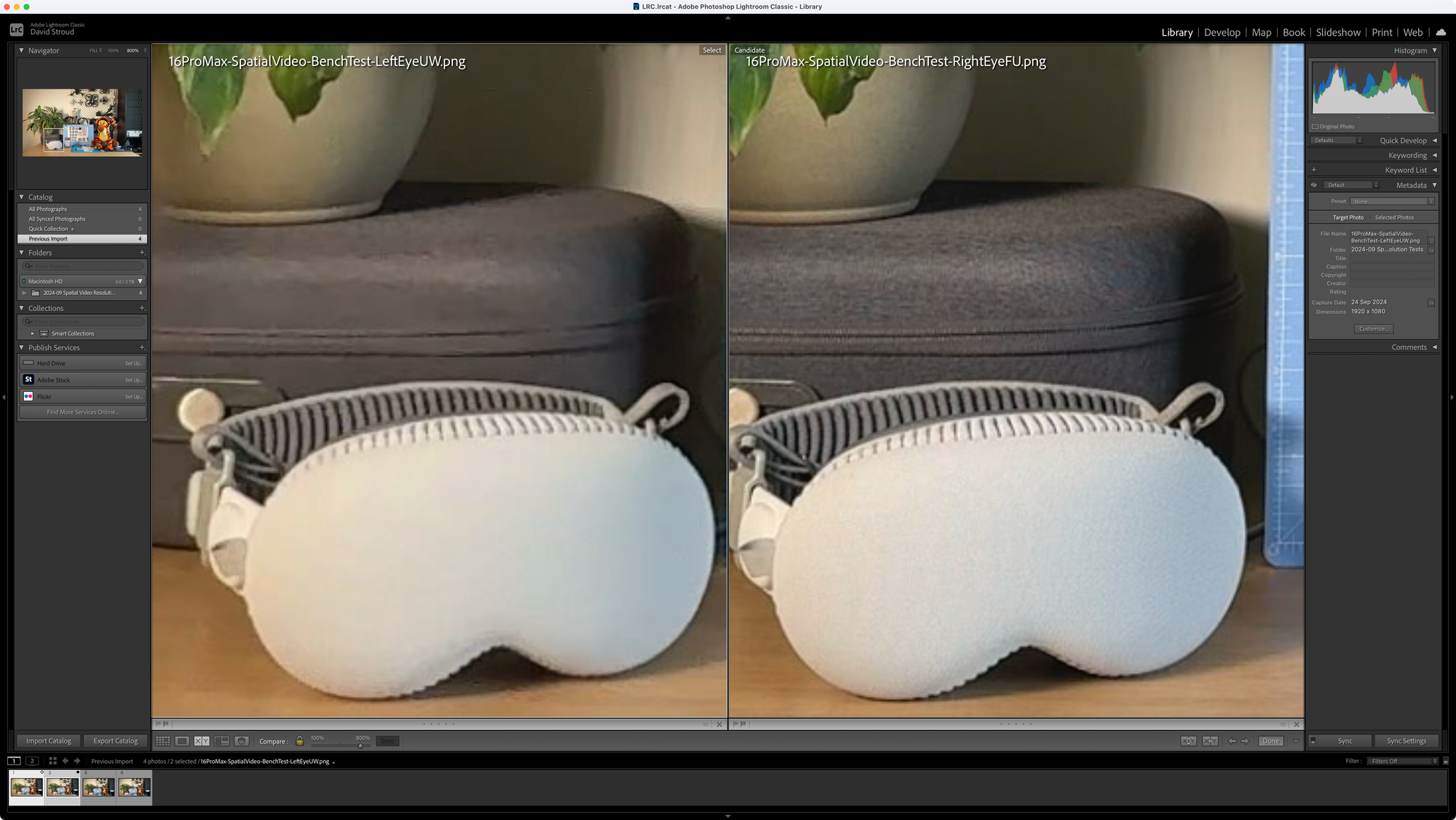

Heading back inside to controlled "studio lighting", I performed another test, with both iPhones static, recording a scene featuring a collection of drones, the Vision Pro headset itself, a copy of the USAF 1951 Resolution Test Chart, and Tigger. I needed to brighten my mood somehow.

I performed the same process:

- recorded some short (~10 second) Spatial video clips with each iPhone

- exported them via Photos.app onto my MacBook Pro

- re-encoded them with the 'spatial' utility to Side-by-Side files at 0.6 quality (this time getting ~11Mbps for each clip)

- brought the SBS files into Premiere

- exported an uncompressed PNG frame grab

- brought those into Photoshop

- cropped and saved the left and right eye images

- and this time, imported them into Lightroom for closer comparison.

Lightroom enabled me to put left and right eye images side-by-side, zoomed in so I could pan them in unison more easily than in Photoshop.
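I did the cropping step by hand in Photoshop, but for anyone who'd rather script it, here's a minimal sketch of how the two eye regions of a Full Side-by-Side frame could be worked out. It assumes the left eye occupies the left half of the frame, and that a 1,920 x 1,080 per-eye video gives a 3,840 x 1,080 SBS frame grab. The function name is hypothetical.

```python
def sbs_eye_boxes(frame_width: int, frame_height: int):
    """Return (left_eye_box, right_eye_box) for a full side-by-side frame.

    Each box is (left, upper, right, lower) in pixels, which is the same
    4-tuple layout that Pillow's Image.crop() accepts.
    """
    if frame_width % 2 != 0:
        raise ValueError("a full SBS frame should have an even width")
    half = frame_width // 2
    left_eye = (0, 0, half, frame_height)
    right_eye = (half, 0, frame_width, frame_height)
    return left_eye, right_eye


# Assumed frame size: two 1,920 x 1,080 views placed side by side.
left_box, right_box = sbs_eye_boxes(3840, 1080)
print(left_box)   # (0, 0, 1920, 1080)
print(right_box)  # (1920, 0, 3840, 1080)
```

If Pillow is installed, each box can be passed to `Image.crop()` on the exported PNG to save the left and right eye images separately.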

Here's How The iPhone 15 Pro Max Fares

Zooming in to different parts of the image...

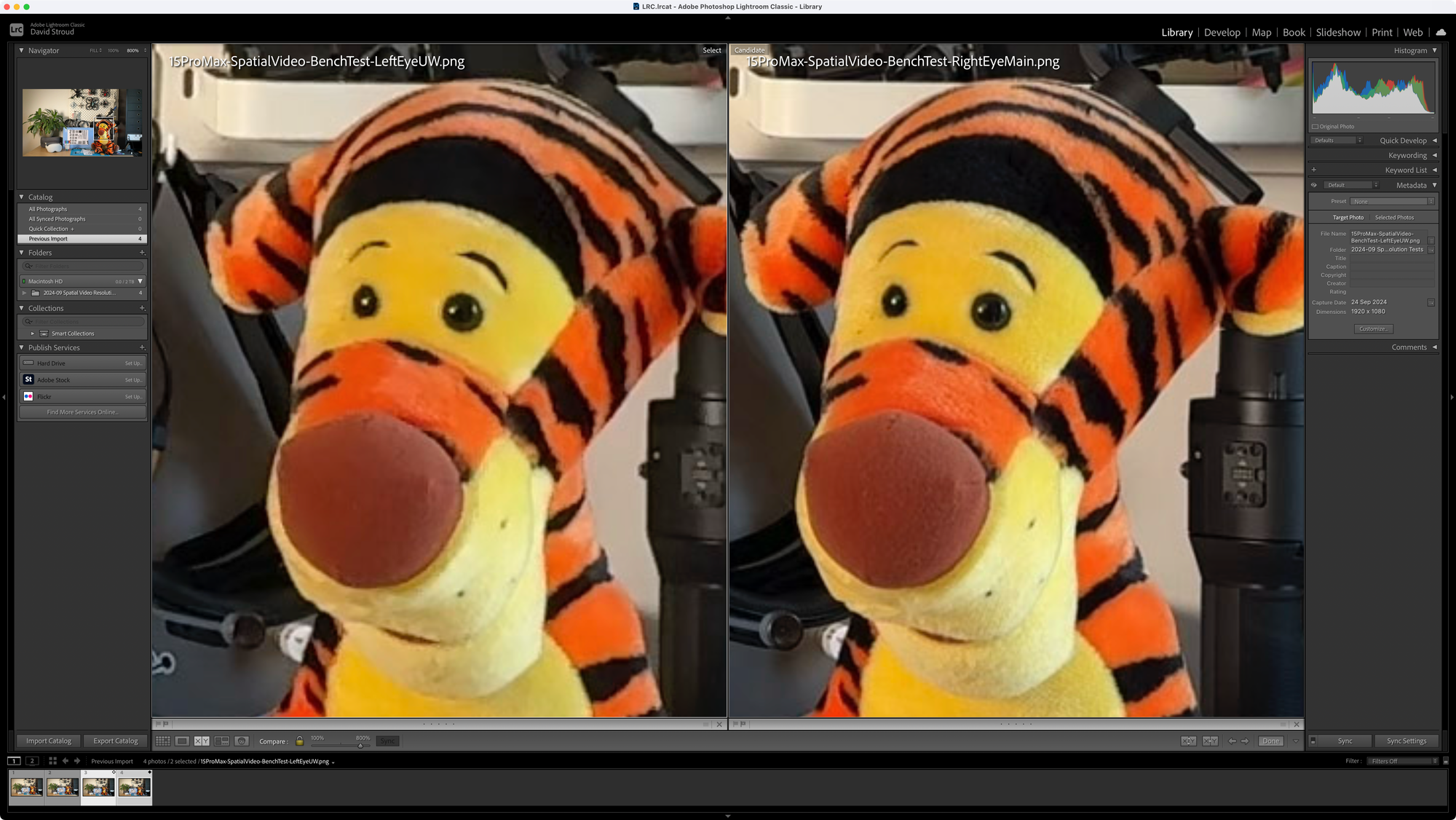

First, while this doesn't look too different when pixel-peeping – now at 800% magnification – the Ultra Wide has lost detail in Tigger's fur, particularly noticeable on his nose (there's a sentence I never thought I'd type)...

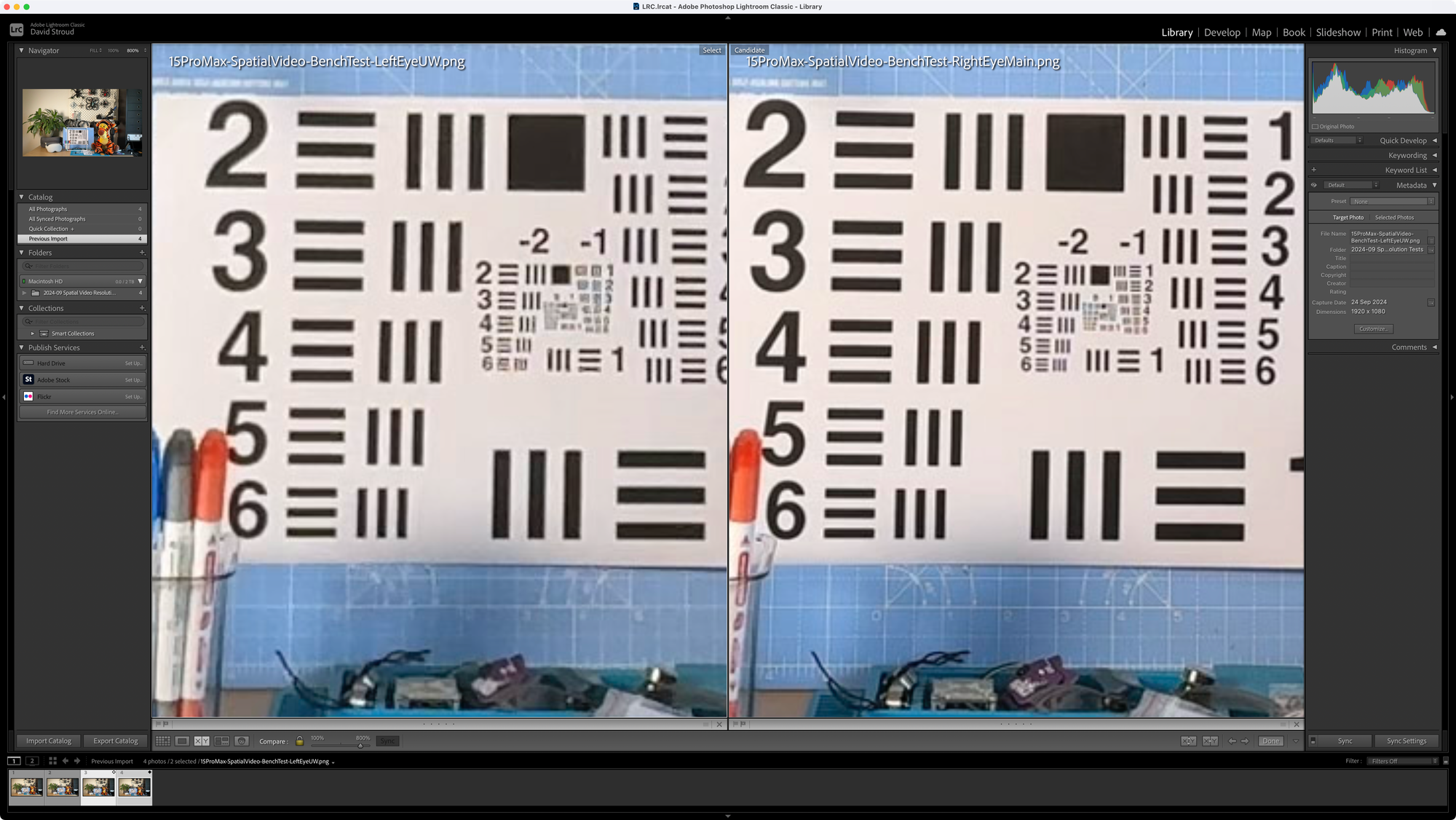

The USAF Resolution Test Chart looks... OK, but the smaller numbers in the centre are muddier, and the loss of detail in the blue silicone mat for the left eye is clearly noticeable...

Finally, it's perhaps this section of the image that betrays the Ultra Wide sensor's processing the most: The left eye's image is almost completely devoid of detail – the fabric on the Vision Pro's cover is completely flattened, as are the details on the case in the background.

And Now The iPhone 16 Pro Max

In short: Very similar results.

Perhaps, if we're being overly generous, there's a hair more detail in Tigger's fur?...

Is there marginally better detail in the blue mat?...

There's certainly no real improvement in the texture on the Vision Pro cover and its case (again, click to zoom in)...

What These Tests Have Shown

The iPhone 16 Pro (Max)'s "new" Ultra Wide sensor, when used for Spatial videos, produces virtually identical results to the iPhone 15 Pro (Max)'s Ultra Wide.

There is no marked improvement in Spatial video quality.

I, like many others, had hoped for at least a noticeable improvement in detail for the "left" eye/camera's view in Spatial videos captured with the newest iPhones and their upgraded 48MP Ultra Wide sensor.

Were we wrong to expect it? Given the other factors discussed – aperture, sensor pixel size, and field of view, which haven't changed from the iPhones 15 to the iPhones 16 – yes. We were wrong to expect an improvement.

To be fair, Apple hasn't claimed – either during their September announcement video, or in their written tech specs – that Spatial video quality would be any better on the iPhones 16 Pro as a result of the new Ultra Wide sensor: They only talked about the new 4x resolution of the sensor, and have only mentioned the new Ultra Wide camera's ability to take "super-high-resolution photos" (i.e. not videos, let alone Spatial videos).

Just as with a regular digital camera, it's not all about the Megapixels.

Going Down The Focal Length Rabbit Hole

How Much Of The iPhone 16 Pro's New 48MP Ultra Wide Sensor Is Actually Being Used For Spatial Videos?

Let's consider physics for a moment.

When capturing Spatial videos, which need left and right eye images to match in terms of their field of view, the 13mm Ultra Wide camera's view has to be cropped in to match that of the 24mm Fusion camera.

Using an online Field of View calculator, we can see that a 24mm lens will cover a "field" – or area – 8 metres away of 96 m²; and at 30m away an area of 1,350 m².

A 13mm lens will capture an area 8 metres away of 327 m² and at 30m away an area of 4,601 m².

The ratio of these areas is: 327/96 = 3.406 and 4,601/1,350 = 3.408.

In other words, the area covered by the iPhone's Ultra Wide lens is physically 3.4 times larger. To output an image of exactly the same region as the 24mm lens, it has to crop in and use only 1/3.4 of its pixels, a bit more than a quarter: 48/3.4 = 14.118 Megapixels.
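The calculator's figures can be reproduced with a few lines of arithmetic. This is a sketch under two assumptions of mine: that the quoted focal lengths are 35mm-equivalent values, so a 36mm x 24mm frame applies, and that a simple pinhole model (covered size = distance x frame size / focal length) is good enough. The numbers it produces match the ones above, which suggests the calculator works the same way.

```python
FRAME_W_MM = 36.0  # assumed full-frame width for 35mm-equivalent focal lengths
FRAME_H_MM = 24.0  # assumed full-frame height


def covered_area_m2(focal_mm: float, distance_m: float) -> float:
    """Area of the scene covered at a given distance, using a pinhole model."""
    width_m = distance_m * FRAME_W_MM / focal_mm
    height_m = distance_m * FRAME_H_MM / focal_mm
    return width_m * height_m


for distance in (8, 30):
    fusion = covered_area_m2(24, distance)
    ultra_wide = covered_area_m2(13, distance)
    print(f"{distance} m: 24mm covers {fusion:.0f} m², 13mm covers {ultra_wide:.0f} m²")

# The ratio doesn't depend on distance: it is just (24 / 13) squared.
area_ratio = (24 / 13) ** 2
print(f"area ratio: {area_ratio:.3f}")
print(f"48 MP sensor after cropping: {48 / area_ratio:.1f} MP")
```

The exact ratio is about 3.408, so the cropped figure comes out at roughly 14.1 Megapixels, in line with the rounded 3.4 used above. Spatial video frames are 16:9 rather than 3:2, but the ratio between the two lenses is unaffected by the aspect ratio.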

By the same logic, was the iPhone 15 Pro / Max only using (12/3.4) 3.529 Megapixels of its Ultra Wide sensor?

Maybe? The frame size of Spatial video is only 1,920 x 1,080 per eye which is... 2,073,600 ...just over 2 Megapixels.

But then why does the new iPhone's cropped 14.1 Megapixels produce a virtually indistinguishable result from the cropped 3.5 Megapixels in the iPhone 15 Pro? Why are we not getting more detail?

Are the so-called "quad-pixels" in the "new" Ultra Wide sensor only addressed individually in photography mode – and when shooting video, are those "quad-pixels" actually being treated as a single pixel, maybe in part because they're so small, and that's the only way to get cropped video that's in any way useable for Spatial video at all?
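To see what that hypothesis would imply, here's a small sketch comparing the cropped pixel counts with and without 2x2 "quad-pixel" binning. The binning behaviour is purely my speculation, not something Apple has documented, and the crop factor is the (24/13)² area ratio from the focal lengths above.

```python
AREA_CROP = (24 / 13) ** 2        # area ratio between the 13mm and 24mm views
FRAME_MP = 1920 * 1080 / 1e6      # one eye of Spatial video, in Megapixels


def cropped_megapixels(sensor_mp: float, binning: int = 1) -> float:
    """Megapixels left after optional pixel binning and the field-of-view crop.

    `binning` is the number of sensor pixels merged into one output pixel
    (4 for a hypothetical 2x2 quad-pixel mode, 1 for no binning).
    """
    return sensor_mp / binning / AREA_CROP


scenarios = {
    "iPhone 15 Pro, 12 MP": cropped_megapixels(12),
    "iPhone 16 Pro, 48 MP, every pixel read": cropped_megapixels(48),
    "iPhone 16 Pro, 48 MP, 2x2 binned (speculative)": cropped_megapixels(48, binning=4),
}

for name, mp in scenarios.items():
    print(f"{name}: {mp:.1f} MP in the crop, {mp / FRAME_MP:.1f}x a 1080p frame")
```

If the quad-pixels really are binned for video, the 16 Pro's crop would contain exactly as many effective pixels as the 15 Pro's (about 3.5 Megapixels, or roughly 1.7x the output frame), which would be consistent with the near-identical results in my tests. It doesn't prove that's what is happening, of course.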

If there's anyone out there who knows more about the technicalities here, I'd love to hear from you (you can find me over on Mastodon).

What Next?

Will a future iOS update improve the quality of Spatial video frames captured by the new Ultra Wide sensor in the iPhones 16?

Or will we need to wait for a future iPhone with an additional lens – for example, two perfectly matched "Fusion" cameras (with matching aperture, sensor pixel size, and field of view) alongside the Ultra Wide and the Telephoto on the Pro iPhones – to achieve matching high quality for both eyes in Spatial videos?

I can't wait to find out, and to get better quality Spatial videos out of these ridiculously advanced devices we carry around with us every day.